Installing Zabbix with Docker, adding hosts, configuring alerts, and performance tuning.

Monitoring modes

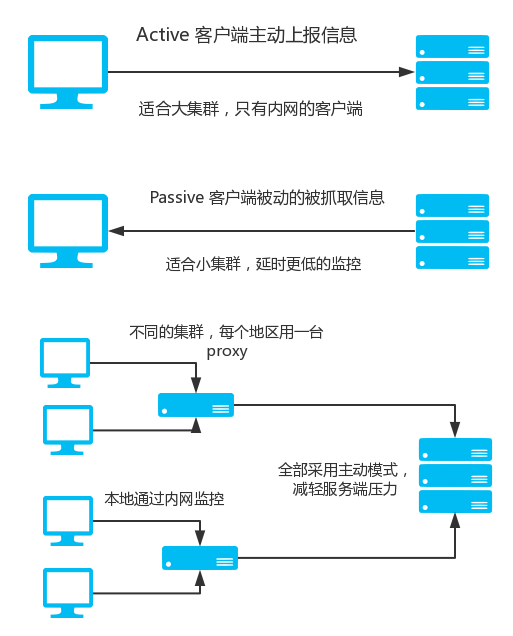

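Zabbix has two monitoring modes, active and passive. A proxy can also be placed between the client and the server, as the diagram shows.

Watch out for iptables: for cross-region monitoring, the proxy must listen on all network interfaces. The internal interface is for talking to clients, and the external one is for talking to the server. I once tried having the proxy listen only on the internal interface. Because it ran in active mode and reported upward layer by layer, the zabbix server could still see that the proxy was alive. But when I tried to add a host, it kept failing. The reason is that the proxy and the server communicate over the external network: the proxy sends requests for the host's information (items and so on), but since it only listens on the internal interface, it never receives the server's replies, so the communication fails.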
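
When chasing this kind of problem, it can help to confirm which address and port combinations are actually reachable before touching the Zabbix configuration. The following is a minimal sketch, not part of the original setup: the function name `tcp_port_open` is made up for illustration, and 10051 is assumed to be the proxy/server trapper port (the Zabbix default).

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


# Example (hypothetical addresses, replace with the proxy's real ones):
#   tcp_port_open("10.0.0.5", 10051)      # proxy's internal address, run from a client
#   tcp_port_open("203.0.113.5", 10051)   # proxy's external address, run from the server side
```

A `False` on one interface only would point at the listen address or at iptables rather than at Zabbix itself.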

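Installing on a LAMP stack

This follows the official LAMP architecture and deploys it the simplest, most native way, without any extra optimization.

    # Install the required dependency packages

Once everything is ready, open a browser and go to http://ServerName:port/zabbix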

Tuning zabbix_server.conf

    # Increase CacheSize when there are too many hosts
    CacheSize=8G

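Docker setup

    # install docker-ce

Next, install the zabbix frontend, backend, and database.

    # Database

    # Backend (parameters already tuned)

    # Frontend

Once the installation finishes, open http://localhost:8080 in a browser. The default account is Admin and the password is zabbix.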

Adding hosts in bulk with Ansible


Action

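When configuring the Action that triggers alerts: if Step is set from 1 to 0, alert messages keep being sent until the event status becomes OK. If Step is set from 1 to 1, only one alert is sent and no further alerts follow.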
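
The step range is easier to reason about as a small model. This is only an illustrative sketch of the rule described above, not Zabbix code: `steps_that_notify` is a hypothetical helper, and `total_steps` stands for however many escalation steps elapse before the problem is resolved.

```python
from typing import List


def steps_that_notify(step_from: int, step_to: int, total_steps: int) -> List[int]:
    """List the 1-based escalation steps at which the operation sends a message.

    step_to == 0 means "no upper bound": the operation repeats on every step
    for as long as the problem stays unresolved.
    """
    if step_from < 1:
        raise ValueError("step_from must be >= 1")
    last = total_steps if step_to == 0 else min(step_to, total_steps)
    return list(range(step_from, last + 1))


print(steps_that_notify(1, 0, 5))  # steps 1 to 0: notifies on every step
print(steps_that_notify(1, 1, 5))  # steps 1 to 1: notifies once
```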

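Monitoring with Zabbix

Monitoring web page status

The Web monitoring feature built into zabbix is enough for simple web page checks. At the time of writing (2019-07-09), the current official zabbix version is 4.2.

First pick a machine: go to Configuration → Hosts, pick a host and click on Web in the row of that host. Then click on Create web scenario. See the official documentation for details. After that, add alerting; the official web monitoring documentation covers this in detail as well.

The charts are on the zabbix home page under Monitoring -> Web, which shows the detailed web monitoring results.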
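
The same scenario can also be created through the JSON-RPC API instead of the web UI. Below is a hedged sketch that only builds the request body for `httptest.create`; the helper name, the token, and the host ID are placeholders, and the single step that expects HTTP 200 is an assumption about what a "simple" check looks like.

```python
import json


def build_web_scenario_request(auth_token, hostid, name, url, request_id=1):
    """Build a JSON-RPC body that creates a one-step web scenario."""
    return {
        "jsonrpc": "2.0",
        "method": "httptest.create",
        "params": {
            "name": name,
            "hostid": hostid,
            # One step: fetch the URL and require status code 200.
            "steps": [
                {"name": name, "url": url, "status_codes": "200", "no": 1}
            ],
        },
        "auth": auth_token,
        "id": request_id,
    }


# Placeholder token and host ID, for illustration only.
body = build_web_scenario_request("<auth token>", "10084", "Homepage", "http://example.com/")
print(json.dumps(body, indent=2))
```

The resulting body would be POSTed to the frontend's `api_jsonrpc.php` endpoint.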

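Monitoring DNS

Official documentation, version 4.2

Zabbix can check out of the box whether a name resolves and what the actual result is. The corresponding built-in key:

    net.dns[<ip>,zone,<type>,<timeout>,<count>]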
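
Since the optional parameters are positional, it is easy to misplace a comma when writing the key by hand. A small helper like the one below (hypothetical, not part of Zabbix) assembles the key string from named arguments and trims trailing empty parameters. It does not quote values, so it assumes none of them contain commas or brackets.

```python
def net_dns_key(name, ip="", record_type="", timeout="", count=""):
    """Build a net.dns item key; empty optional parameters are left blank."""
    params = [ip, name, record_type, str(timeout), str(count)]
    # Drop trailing empty parameters so the key stays short.
    while params and params[-1] == "":
        params.pop()
    return "net.dns[" + ",".join(params) + "]"


print(net_dns_key("example.com", record_type="A"))
print(net_dns_key("example.com", ip="8.8.8.8", record_type="A", timeout=2, count=1))
```

The generated string can be pasted into an item definition or passed to `zabbix_get -k`.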

Database table optimization

    DELIMITER $$

Trends use 120. For ('history', 30, 24, 14): keep at most 30 days of data, create one partition every 24 hours, and create 14 partitions each time.
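
To make the three numbers concrete, here is a sketch of the arithmetic they imply. It does not reproduce the stored procedure in partition.sql (which is not shown in full here); the function name and the choice to align boundaries to midnight are assumptions that only make sense for a 24-hour interval.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def partition_plan(now: datetime, keep_days: int, hourly_interval: int,
                   create_next: int) -> Tuple[datetime, List[datetime]]:
    """Return (drop_before, upper_bounds) for one maintenance run.

    drop_before:  partitions entirely older than this can be dropped.
    upper_bounds: upper boundaries of the partitions to pre-create.
    """
    interval = timedelta(hours=hourly_interval)
    # Assumption: align to the start of the current day.
    start = now.replace(hour=0, minute=0, second=0, microsecond=0)
    upper_bounds = [start + interval * (i + 1) for i in range(create_next)]
    drop_before = start - timedelta(days=keep_days)
    return drop_before, upper_bounds


drop_before, bounds = partition_plan(datetime(2019, 7, 9, 10, 30), 30, 24, 14)
print("drop partitions older than", drop_before)
print("pre-create", len(bounds), "partitions, last boundary", bounds[-1])
```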

First enter the container and import the partition.sql above into the mysql database

    mysql -uzabbix -pfeiyang@2019+ zabbix < partition.sql

Exit the container and set up a scheduled job on the host machine

    # vim /etc/crontab

Zabbix API

    # auth_zabbix

    # get hostid

    # get hist_data

    # get trend_data
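
The four steps above map onto four JSON-RPC methods: `user.login`, `host.get`, `history.get`, and `trend.get`. The sketch below is one possible shape for them using only the standard library. The helper names are invented, the URL assumes the Docker frontend on localhost:8080 described earlier, and the parameter names follow the 4.2 API as I understand it (for example, `user` rather than `username` for login).

```python
import json
import urllib.request

API_URL = "http://localhost:8080/api_jsonrpc.php"  # assumed frontend address


def build_request(method, params, auth=None, request_id=1):
    """Wrap method and params in a JSON-RPC 2.0 envelope."""
    return {"jsonrpc": "2.0", "method": method, "params": params,
            "auth": auth, "id": request_id}


def call(method, params, auth=None, url=API_URL):
    """POST one request and return its 'result', raising on API errors."""
    body = json.dumps(build_request(method, params, auth)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json-rpc"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        reply = json.load(resp)
    if "error" in reply:
        raise RuntimeError(reply["error"])
    return reply["result"]


def login_params(user, password):
    # auth_zabbix
    return {"user": user, "password": password}


def hostid_params(hostname):
    # get hostid
    return {"output": ["hostid"], "filter": {"host": [hostname]}}


def history_params(itemid, time_from, time_till, value_type=0):
    # get hist_data; value_type 0 means numeric float
    return {"output": "extend", "history": value_type, "itemids": [itemid],
            "time_from": time_from, "time_till": time_till,
            "sortfield": "clock", "sortorder": "ASC"}


def trend_params(itemid, time_from, time_till):
    # get trend_data
    return {"output": ["itemid", "clock", "num",
                       "value_min", "value_avg", "value_max"],
            "itemids": [itemid], "time_from": time_from, "time_till": time_till}


# Usage against a running frontend (not executed here):
#   token = call("user.login", login_params("Admin", "zabbix"))
#   hosts = call("host.get", hostid_params("10.66.236.98"), auth=token)
```

Keeping the parameter builders separate from `call` makes them easy to inspect without a live server.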

zabbix_get

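Run zabbix_get on the server to check whether the network path from the server to the client is working. If it is not, the cause may be iptables or the allowlist of server hosts.

    zabbix_get -s 10.10.1.1 -k system.uname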
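
Where zabbix_get is not installed, roughly the same check can be done by hand, because a passive check is a short framed message over TCP. The sketch below assumes the framing documented for 4.x (the bytes `ZBXD\x01` followed by an 8-byte little-endian length) and assumes the agent accepts a framed request; the function names are my own.

```python
import socket
import struct

HEADER = b"ZBXD\x01"  # protocol signature plus flags byte


def pack_message(payload: bytes) -> bytes:
    """Prefix payload with the Zabbix header and an 8-byte little-endian length."""
    return HEADER + struct.pack("<Q", len(payload)) + payload


def unpack_message(frame: bytes) -> bytes:
    """Validate the header and return the payload of a complete frame."""
    if frame[:5] != HEADER:
        raise ValueError("missing ZBXD header")
    (length,) = struct.unpack("<Q", frame[5:13])
    payload = frame[13:13 + length]
    if len(payload) != length:
        raise ValueError("truncated frame")
    return payload


def query_agent(host: str, key: str, port: int = 10050, timeout: float = 3.0) -> str:
    """Send one passive check and return the agent's reply as text."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(pack_message(key.encode("utf-8")))
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # the agent closes the connection after replying
                break
            chunks.append(chunk)
    return unpack_message(b"".join(chunks)).decode("utf-8")


# Usage (needs a reachable agent that allows this machine in its Server list):
#   query_agent("10.10.1.1", "system.uname")
```

A connection error here points at the network or iptables, while an immediately closed connection with an empty reply is typical of the agent rejecting the source address.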

zabbix proxy

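If the server cluster is small, passive mode is fine (passive from the client's point of view: the zabbix server pulls the data). If the cluster is large, polling that many clients puts heavy load on the zabbix server. Proxy mode is also recommended for cross-region monitoring, where everything is aggregated into one central zabbix for easier management.

For this demo we use three machines: client 10.66.236.98, proxy 10.66.236.99, and zabbix_server 10.66.236.100 (which can be installed with the Docker method above). For a non-Docker install, see 奥哥's blog.

First, install zabbix proxy on 10.66.236.99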

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39# zabbix proxy 的依赖就只有数据库了,用于存储配置信息

yum install -y mariadb-server mariadb

# 启动 mariadb 服务

systemctl start mariadb.service

systemctl enable mariadb.service

# 初始化 mysql 数据库,并配置 root 用户密码

mysql_secure_installation

# 初始化,然后输入密码

# 创建 zabbix_proxy 数据库

mysql -uroot -p

mysql> create database zabbix_proxy character set utf8 collate utf8_bin;

mysql> grant all privileges on zabbix_proxy.* to zabbix@localhost identified by 'zabbix';

mysql> quit;

# 部署 zabbix_proxy 4.2 版本的

rpm -Uvh https://repo.zabbix.com/zabbix/4.2/rhel/7/x86_64/zabbix-release-4.2-2.el7.noarch.rpm

yum install -y zabbix-proxy-mysql

zcat /usr/share/doc/zabbix-proxy-mysql*/schema.sql.gz | mysql -uzabbix -p zabbix_proxy

#输入密码: zabbix

# 配置数据库用户及密码

vim /etc/zabbix/zabbix_proxy.conf

Server=10.66.236.100 #填写 zabbix server 的IP

Hostname=zabbix_proxy

DBName=zabbix_proxy

DBUser=zabbix

DBPassword=zabbix

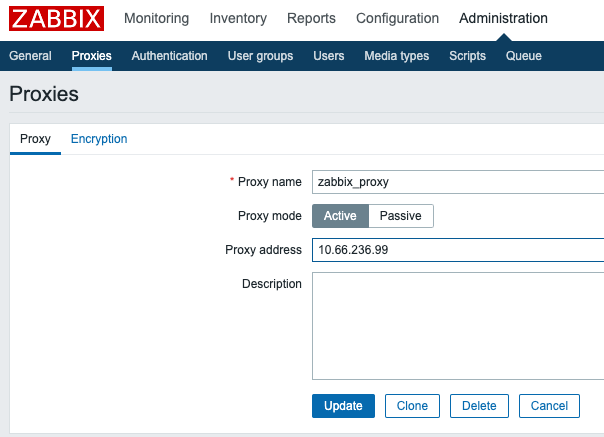

# 网页上配置 Zabbix Server Proxy

Administration -> Proxies -> Create proxy

Proxy name: zabbix_proxy

Proxy mode: Active

Proxy address: 10.66.236.99

# 启动 zabbix_proxy

systemctl restart zabbix-proxy

In Active mode the IP does not need to be filled in here. Only Passive mode requires the IP.
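
The active/passive difference shows up clearly in the API parameters for `proxy.create`. This is a sketch under my reading of the 4.2 API, where status 5 is an active proxy and status 6 a passive one that needs an interface; the helper name is hypothetical, and the result would be sent as the `params` of a JSON-RPC request.

```python
def proxy_create_params(name, active=True, ip=None, port="10051"):
    """Build proxy.create params; only a passive proxy needs an address."""
    if active:
        # Active proxy: it connects to the server, so no interface is required.
        return {"host": name, "status": "5"}
    if not ip:
        raise ValueError("a passive proxy needs the IP the server should connect to")
    return {
        "host": name,
        "status": "6",
        "interface": {"ip": ip, "dns": "", "useip": "1", "port": port},
    }


print(proxy_create_params("zabbix_proxy"))
print(proxy_create_params("zabbix_proxy", active=False, ip="10.66.236.99"))
```

The `host` value has to match the `Hostname` set in zabbix_proxy.conf.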

修改 client 端的配置

1

2

3

4

5

6

7# 修改 zabbix_agent 配置指向 zabbix_proxy

vim /etc/zabbix/zabbix_agentd.conf

ServerActive=10.66.236.99 #proxy 的 IP

Hostname=10.66.236.98 #本机的 IP

# 重启 zabbix_agent

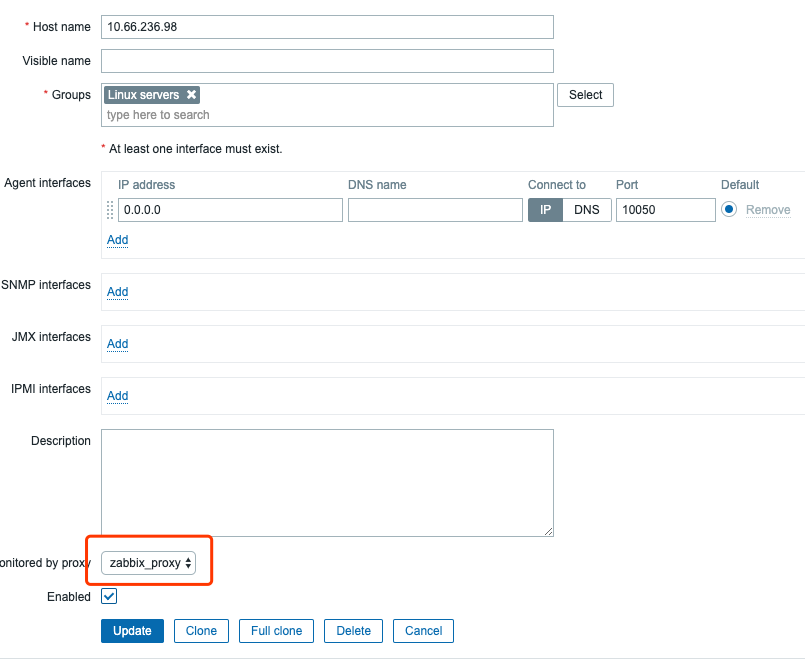

systemctl restart zabbix-agent在网页上添加 host

Be sure to select the proxy, and enter 0.0.0.0 as the IP. Another very important point: every item in the template must use the Zabbix agent (active) type.
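
For reference, the same host definition expressed as `host.create` parameters might look like the sketch below. The IDs are placeholders that would come from `proxy.get`, `hostgroup.get`, and `template.get`; the helper name is made up, and the interface fields follow the 4.2 API as I understand it.

```python
def host_create_params(hostname, proxy_hostid, groupid, templateid):
    """Build host.create params for an active-agent host behind a proxy."""
    return {
        # Must match Hostname in the agent's zabbix_agentd.conf exactly.
        "host": hostname,
        "proxy_hostid": proxy_hostid,
        "interfaces": [{
            "type": 1,        # agent interface
            "main": 1,
            "useip": 1,
            "ip": "0.0.0.0",  # not used for active checks, as noted above
            "dns": "",
            "port": "10050",
        }],
        "groups": [{"groupid": groupid}],
        # Assumed to be a template whose items are all "Zabbix agent (active)".
        "templates": [{"templateid": templateid}],
    }


# Placeholder IDs, for illustration only.
print(host_create_params("10.66.236.98", "10300", "2", "10001"))
```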

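Pitfalls encountered

- While testing the proxy, I hit an error caused by a host name mismatch. I initially entered an arbitrary host name, then noticed it did not match the agent config and changed it to 10.66.236.98 in the zabbix web UI. The database did not seem to pick up the change, so the host name still failed to match and the agent kept reporting: no active checks on server [10.66.236.99:10051]: host [10.66.236.98] not found

The fix was to delete the host and add it again, entering the correct host name 10.66.236.98 from the start.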
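
A quick pre-flight check can catch this mismatch before the host is created. The sketch below reads the `Hostname` value out of the agent config text and compares it with the name about to be entered in the web UI. Both function names are hypothetical, and the parser assumes comments only appear on their own lines, which is how Zabbix config files treat `#`.

```python
from typing import Optional


def agent_hostname(conf_text: str) -> Optional[str]:
    """Return the Hostname value from zabbix_agentd.conf text, if set."""
    hostname = None
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and full-line comments
        key, sep, value = line.partition("=")
        if sep and key.strip() == "Hostname":
            hostname = value.strip()  # the last occurrence wins
    return hostname


def names_match(conf_text: str, frontend_host_name: str) -> bool:
    """True if the agent's Hostname equals the host name used in the frontend."""
    return agent_hostname(conf_text) == frontend_host_name


conf = "ServerActive=10.66.236.99\nHostname=10.66.236.98\n"
print(names_match(conf, "10.66.236.98"))
```

The comparison is case-sensitive, which matches how the "host not found" lookup behaved in my test.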